Abstract

It has long been debated how humans resolve fine details and perceive a stable visual world despite the incessant fixational motion of their eyes. Current theories assume that these processes rely solely on the visual input to the retina, without contributions from motor and/or proprioceptive sources. Here we show that, contrary to this widespread assumption, the visual system has access to high-resolution extraretinal knowledge of fixational eye motion and uses it to deduce spatial relations. Building on recent advances in gaze-contingent display control, we created a spatial discrimination task in which the stimulus configuration was entirely determined by oculomotor activity. Our results show that humans correctly infer geometrical relations in the absence of spatial information on the retina and accurately combine high-resolution extraretinal monitoring of gaze displacement with retinal signals. These findings reveal a sensory-motor strategy for encoding space, in which fine oculomotor knowledge is used to interpret the fixational input to the retina.


Introduction

Our eyes are never at rest. Since fine visual resolution is restricted to a tiny portion of the retina, the fovea, humans use eye movements to inspect objects of interest. Remarkably, the eyes remain in motion even in the intervals between voluntary gaze shifts, the so-called “fixation” periods in which visual information is acquired and processed. In these periods, a persistent eye jitter, known as ocular drift, continually perturbs the direction of gaze, moving the projection of the stimulus on the retina across dozens of receptors.

Given the extent of ocular drift and the temporal responses of retinal neurons, it has long been questioned how the visual system manages to avoid perceptual blurring during fixation and establish stable high-acuity representations1,2,3. Multiple theories have been proposed. Some regard the fixational motion of the eye as a challenge to be overcome through specific decoding strategies4. Others argue that eye movements are beneficial for processing spatial information, either by transforming spatial patterns into temporal modulations5,6,7 or by following spatial registration strategies similar to those used in computer vision to enhance image resolution8. Although the proposed theories differ widely in their specific mechanisms, they all share the common assumption that spatial representations at fixation are established solely based on the visual input signals impinging onto the retina, without making use of information from other sources.

This standard assumption, however, contrasts with the multimodal and sensorimotor integration that is known to occur in the presence of larger eye movements, such as the rapid gaze shifts (saccades) and tracking movements (smooth pursuit) that bring objects onto the fovea and keep them there. With these movements, interpretation of retinal activity critically depends on motor and proprioceptive knowledge about how the eyes move. Extraretinal signals are known to modulate visual responses by both enhancing and attenuating sensitivity, often in a dynamic manner at specific times during the movements9,10,11,12,13,14,15,16,17. Extraretinal modulations are deemed to be essential for extracting information from the retinal flow18,19, establishing spatial representations20,21,22,23,24, and discarding the motion of the retinal image caused by the eye movements themselves25.

Various factors have contributed to the current tenet that a similar visuomotor integration does not take place during fixation. From a historical perspective, vision science has traditionally approximated the fixational input to the retina as an image, neglecting the incessant motion of the eye and/or assuming this motion to be too small to yield reliable motor or proprioceptive signals. The eyes appear to wander erratically during fixation, leading many researchers to conclude that ocular drift stems from limits in oculomotor control26,27 and is, thus, unlikely to be monitored. Reinforcing this idea, previous attempts to identify extraretinal signals associated with fixational drifts reported negative results28,29, and several studies have argued that retinal signals are solely responsible for establishing stable visual representations during fixation (e.g., ref. 30).

However, contrary to the mainstream assumption, it has long been proposed that ocular drift may actually represent a form of slow control aimed at delivering a desired amount of retinal image motion31,32. This proposal has received renewed support from recent findings, including the observation that drift partly counteracts the physiological instability of the head33, as well as task- and stimulus-dependent changes in drift characteristics34,35,36. Furthermore, previous studies that searched for motor knowledge of fixational drifts either did not control for the spatial information delivered to the retina28 or focused on relatively long temporal windows, over which memory decay could have played a role (e.g., ref. 29). These considerations call for more specific investigations into the mechanisms by which stable high-resolution spatial representations are established during the incessant fixational motion of the eye.

Here we built upon recent advances in high-resolution eye-tracking and gaze-contingent display control (the capability to modify the stimulus in real time according to the observer’s eye movements) to precisely control retinal stimulation. We developed a spatial discrimination task that cannot be accomplished solely on the basis of the visual input signals to the retina, but instead depends critically on knowledge of eye position. We show that, despite the lack of spatial information in the retinal input, the visual system is capable of reconstructing the configuration of the stimulus, and therefore of estimating the fixational motion of the eye, with exquisite sensitivity. These results show that humans possess fine motor knowledge of the way the eye drifts during fixation and integrate this information into high-resolution spatial representations.

Results

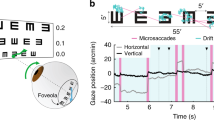

We developed a task whose successful execution requires motor knowledge of the direction in which the eye moves. In a forced-choice task, subjects discriminated the spatial configuration of a stimulus that depended entirely on the eye movements they performed. They reported whether the bottom bar of a Vernier appeared to be to the right or left of the top bar (Fig. 1A), but, unlike in a conventional spatial judgment, the two bars of the Vernier were never visible simultaneously, and no information about their spatial offset was ever delivered to the retina. This was achieved via a gaze-contingent procedure that rendered the stimulus on the display as seen through a retinally-stabilized aperture: a thin slit that moved under real-time computer control together with the eye, restricting stimulation to a narrow vertical strip on the retina centered on the fovea (Fig. 1B). In this way, as the normal fixational motion of the eye swept the aperture across the stimulus, the two bars appeared sequentially at vertically aligned positions on the retina (Fig. 1C), yielding input signals that, under ideal conditions, are not informative for the task (Fig. 1D).

A–C Experimental design. A Subjects reported the spatial configuration of a Vernier (left or right) viewed through a retinally-stabilized aperture. B The aperture moved together with the eye, to allow stimulation of only a thin vertical strip on the retina. The width of the aperture was equal to that of each Vernier bar (bars were 28\({}^{{\prime} }\) long and 1.4\({}^{{\prime} }\) wide, the angle covered by one pixel on the CRT). C In this way, each Vernier bar was visible only when it directly overlapped with the aperture, resulting in vertically-aligned bar exposures on the retina. D Motor knowledge of eye movements is required to accomplish this task. The same visual input signals can be obtained with different configurations of the stimulus, when the eye drifts in opposite directions. E Example trace of eye movements in a trial. The shaded green regions mark the periods of exposure of each Vernier bar. The pink region indicates the inter-stimulus interval (ISI), here 100 ms. F–I Ocular drift characteristics and performance in the task. Data from N = 6 human observers. F Mean eye speed and displacement are virtually identical to those measured in the same subjects while fixating on a marker. Shaded regions represent ± one SEM across subjects. G Average probability distribution of gaze displacement between bar exposures. H, I Subjects correctly reported the configuration of the stimulus. Both the proportion of correct responses and the discriminability index were significantly above chance (H: **p = \(3.16\times10^{-4}\); ***p = \(3.44\times10^{-6}\); I: **p = \(5.02\times10^{-4}\), ***p = \(9.16\times10^{-6}\), two-tailed t-test) and improved as the Vernier gap increased (H: *p = 0.0024; I: *p = 0.0016, paired two-tailed t-test). Gray circles are individual subjects' data. Diamonds and associated error bars represent averages ± one SEM across subjects. Source data are provided as a Source data file.
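To make the logic of Fig. 1C, D concrete, the following Python sketch models an idealized version of the gaze-contingent procedure, assuming continuous (unquantized) rendering and no eye-tracking error. The function name `render_trial` and the sign convention (offset of the second bar relative to the first) are illustrative choices, not part of the original apparatus. Each bar is drawn at the gaze position current at its exposure time, so the display offset equals the drift between exposures while both bars land at the same retinal position.

```python
# Hypothetical sketch of ideal gaze-contingent rendering (no display quantization).
# Positions are horizontal, in arcmin. The sign convention (offset of the second bar
# relative to the first) is an illustrative assumption.

def render_trial(gaze_t1: float, gaze_t2: float) -> dict:
    """Draw each bar at the gaze position current at its exposure time (T1, T2)."""
    first_bar = gaze_t1    # display position of the bar flashed at T1
    second_bar = gaze_t2   # display position of the bar flashed at T2
    return {
        # Vernier offset on the display: fully determined by the drift between T1 and T2.
        "display_offset": second_bar - first_bar,
        # Retinal position = display position minus gaze position at exposure.
        "retinal_first": first_bar - gaze_t1,
        "retinal_second": second_bar - gaze_t2,
    }


# Equal drift amplitudes in opposite directions (cf. Fig. 1D).
rightward = render_trial(gaze_t1=0.0, gaze_t2=2.0)
leftward = render_trial(gaze_t1=0.0, gaze_t2=-2.0)
print(rightward)  # {'display_offset': 2.0, 'retinal_first': 0.0, 'retinal_second': 0.0}
print(leftward)   # {'display_offset': -2.0, 'retinal_first': 0.0, 'retinal_second': 0.0}
```

Because the two trials produce identical retinal inputs but opposite display configurations, the correct answer can only be recovered from knowledge of the drift itself.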

In practice, unbeknownst to the observer, the two bars were displayed one below and one above the position of the center of gaze at two separate times (T1 and T2 in Fig. 1E), the first at a random time from the beginning of the trial, and the second after a fixed delay from the disappearance of the first bar (the inter-stimulus interval, ISI). Subjects moved their eyes normally under these conditions while attempting to maintain fixation at the remembered location of a marker (a 5\({}^{{\prime} }\) dot) briefly presented at the beginning of each trial. They alternated occasional small saccades with periods of ocular drift, which moved the eye in its stereotypical, seemingly erratic fashion with characteristics similar to those measured from the same observers when maintaining fixation on a visible marker (Fig. 1F). In this study we specifically focused on fixational drifts and discarded all trials in which subjects performed saccades or microsaccades. With an ISI of 100 ms, ocular drift resulted in displacements of the line of sight distributed around \(\pm {4}^{{\prime} }\) (Fig. 1G).
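The displacement distribution accumulated over one ISI can be related to the Brownian-motion description of drift used later in the ideal observer model. The sketch below assumes 1-D Brownian motion with a hypothetical diffusion constant `D` (not a value measured in this study), simulates displacements accumulated over a 100 ms ISI, and checks that their standard deviation approaches \(\sqrt{2DT}\).

```python
import numpy as np


def simulate_drift_displacements(D, isi_s, n_trials, dt_s=0.001, seed=0):
    """Horizontal gaze displacement accumulated over one ISI by 1-D Brownian motion.

    D is a diffusion constant in arcmin^2/s; each time step adds an independent
    Gaussian increment with variance 2*D*dt, so the total variance is 2*D*ISI.
    """
    rng = np.random.default_rng(seed)
    n_steps = int(round(isi_s / dt_s))
    steps = rng.normal(0.0, np.sqrt(2.0 * D * dt_s), size=(n_trials, n_steps))
    return steps.sum(axis=1)


D = 20.0  # hypothetical diffusion constant (arcmin^2/s), chosen only for illustration
disp = simulate_drift_displacements(D, isi_s=0.1, n_trials=20_000)
print(f"empirical SD: {disp.std():.2f} arcmin, "
      f"predicted sqrt(2*D*T): {np.sqrt(2 * D * 0.1):.2f} arcmin")
```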

Remarkably, subjects were highly proficient in reporting the stimulus configuration, even though its spatial layout was never made explicit on the retina (Fig. 1H, I). Their qualitative experience consisted of two successive flashes with a clear spatial offset. Performance was significantly above chance already at the smallest Vernier offset that could be presented, a gap of only 1.4\({}^{{\prime} }\), corresponding to a single pixel on the display. Performance further increased with larger gaps, with d\({}^{{\prime} }\) doubling as the Vernier offset increased to 2.8\({}^{{\prime} }\). These results were highly consistent across individuals: all subjects were able to accomplish the task. In each individual observer, performance was significantly above chance at all Vernier gaps (p < 0.021, one-tailed bootstrap test), with the exception of one subject at the smallest offset (1.4\({}^{{\prime} }\)), whose d\({}^{{\prime} }\) was close to significance (p = 0.065).
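The discriminability index d′ can be computed from left/right reports in the standard signal-detection way. The text does not specify the exact procedure, so the sketch below is an assumption: it treats “Right” reports on right-offset trials as hits and “Right” reports on left-offset trials as false alarms, and applies a log-linear correction. The function `dprime` and the correction are illustrative choices, and the counts in the usage line are made up.

```python
from statistics import NormalDist


def dprime(right_resp_on_right, n_right, right_resp_on_left, n_left):
    """d' = z(hit rate) - z(false-alarm rate), with "Right" as the signal response.

    Hits: "Right" reports when the bottom bar was to the right on the display.
    False alarms: "Right" reports when it was to the left. A log-linear correction
    (+0.5 per count, +1 per total) keeps both rates strictly between 0 and 1.
    """
    z = NormalDist().inv_cdf
    hit_rate = (right_resp_on_right + 0.5) / (n_right + 1)
    fa_rate = (right_resp_on_left + 0.5) / (n_left + 1)
    return z(hit_rate) - z(fa_rate)


# Illustrative counts only (not data from this study).
print(round(dprime(70, 100, 30, 100), 2))
```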

These results could not be explained by directional biases in eye movements that observers might have learned, for example by realizing that drift was more pronounced in one direction. No obvious directional biases were observed in the recorded data, and horizontal displacements in the two directions were approximately symmetrically distributed (Fig. S1A). Furthermore, performance was high both in trials in which the eye drifted to the left and in trials in which it drifted to the right (Fig. S1B, C), indicating knowledge of the specific direction of ocular drift in each individual trial. Thus, these data suggest that the visual system has access to high-resolution extraretinal information about how the eye moves during fixation.

Given these unexpected results, we wondered whether our methods of visual stimulation inadvertently introduced spurious spatial cues. Meticulous care had been taken to eliminate all obvious retinal cues that could inform about the stimulus configuration. This included conducting the experiments in complete darkness (while preventing dark adaptation with brief light exposure between blocks of trials) to avoid visual references; using a fast-phosphor high-speed CRT display to minimize persistence; and lowering the monitor intensity to its minimum setting to ensure that the edges of the monitor were not visible. We questioned, however, whether more subtle cues, such as the baseline luminance of the CRT display or residual phosphor persistence, played a role by providing unwanted visual references. We also wondered whether the aperture had provided some type of motion signal that could inform about the drift direction. For these reasons, we repeated the experiment using a custom-built display, an array of 110 × 8 LEDs specifically selected to provide no persistence and no baseline luminance (Fig. 2A). We also ruled out any possible motion signal by exposing each Vernier bar only for a brief interval (5 ms), the shortest detectable exposure allowed by our display.

A A custom LED display developed specifically for this study. This display, an array of 110 × 8 LEDs, each covering 1.9\({}^{{\prime} }\) on the horizontal meridian, was designed to provide no persistence and no background luminance. The inset shows the time course of activity of one of the LEDs with the brief exposures used in our experiments, measured with a high-speed photocell. B–F Performance measured with 5 ms exposures (N = 7 subjects). Both the proportion of correct responses (B) and the discriminability index (C) improved as the Vernier gap increased (*p = \(7.21\times10^{-4}\) in B and \(1.65\times10^{-3}\) in C; paired two-tailed t-tests) and were significantly above chance (B: **p = \(4.5\times10^{-4}\), ***p = \(5\times10^{-5}\); C: **p = \(1.17\times10^{-3}\), ***p = \(7.49\times10^{-4}\), two-tailed t-tests). Graphic conventions are as in Fig. 1H, I, with diamonds representing mean values ± SEM across subjects. D Probability of “Right” responses as a function of both the eye displacement in a trial (XE) and the small misalignment on the retina caused by the display resolution (\(|{X}_{R}| \, < \, 1.{9}^{{\prime} }\); one LED, see panel A). Negative and positive XR indicate that, on the retina, the bottom bar was shifted to the left or right, respectively. Each diagonal line represents a Vernier offset X on the display. E Marginal probability of “Right” responses as a function of the eye displacement in a trial, separately for XR < 0 and XR > 0. The shaded regions represent ± one SEM. Perceptual reports are influenced by XR (the oscillations in both curves) but primarily driven by XE (the overall trend). F Mean performance ± SEM in the trials in which XE and XR had opposite signs. Subjects successfully completed the task even when XR predicted the wrong response (*p = 0.0297 and **p = \(3.54\times10^{-4}\) above chance; two-tailed t-test). Source data are provided as a Source data file.

Comparison between the data in Fig. 2B, C and Fig. 1H, I shows that results were little affected by these changes in visual stimulation. The drift behavior changed little from the previous experiment and remained practically identical to that observed during fixation on a visible marker (Fig. S2). Critically, subjects continued to correctly report the stimulus even under these more stringent conditions: performance was already above chance at the smallest possible Vernier offset (in this case 1.9\({}^{{\prime} }\), the width of one LED) and further improved as the distance between the two bars increased. These effects were clearly visible in the data from each observer, all of whom individually exhibited above-chance performance at all Vernier offsets presented (p < 0.011, bootstrap test).

These findings were very robust. As in the experiment of Fig. 1, performance was similar for leftward and rightward ocular drift (Fig. S1D–F), showing access to the specific drift trajectory performed in each trial, rather than knowledge of possible directional biases. Results were also not caused by possible inaccuracies in measuring eye movements. In this regard, it is important to note that the experiments relied on the relative alignment of the two bars on the retina, not their absolute positions. That is, conclusions do not depend on the accuracy of gaze localization (a notoriously difficult operation) but on the capability to measure changes in gaze position, something that a properly tuned and calibrated DPI eye-tracker accomplishes with sub-arcminute resolution37. Monte Carlo simulations show that eye movements would need to be overestimated by an unrealistic amount, over 100%, to account for our findings (Fig. S3). This degree of imprecision is not plausible with our recording apparatus.

Furthermore, analysis of residual errors in the alignment of retinal stimuli revealed that these cues cannot account for the experimental data. To be perfectly aligned on the retina, each bar needs to be rendered exactly at the current location of gaze. In practice, however, the precision of this operation is limited by the resolution of the display, as the stimulus can only be shown at the closest pixel/LED location, resulting in a small offset (XR in Fig. 2A). This misalignment did exert a perceptual influence. For each Vernier offset X on the display (diagonal lines in Fig. 2D), perceptual reports exhibited a subtle but systematic influence from XR: the probability of reporting the bottom bar to the right was slightly larger when the misalignment was consistent with this interpretation (XR > 0) than when it was in the opposite direction (XR < 0; diagonal arrow in Fig. 2D). However, this cue could not possibly account for the general pattern of results obtained as X varied. Its influence was small relative to that exerted by the gaze displacement XE (horizontal arrow in Fig. 2D), and overall, perceptual reports were driven by XE irrespective of XR (Fig. 2E). In fact, XR was overall poorly correlated with subject responses (average correlation coefficient across observers: ρ = −0.016 ± 0.093), and subjects were able to accomplish the task even in trials in which the misalignment indicated the wrong response, that is, trials in which XR was in the opposite direction of the Vernier offset on the display (Fig. 2F). All these analyses further support the conclusion that humans incorporate fine oculomotor knowledge in the establishment of spatial representations.
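The decomposition of the display offset into gaze displacement and retinal misalignment follows from rounding each bar to the nearest LED. The sketch below assumes that each bar is drawn at the LED closest to the gaze position measured at that moment, with the 1.9′ LED pitch of Fig. 2A; the helper names and the sign convention (second bar relative to first) are hypothetical. By construction X = XE + XR, and because each bar's rounding error is at most half an LED, |XR| cannot exceed one LED.

```python
import math

LED_PITCH = 1.9  # arcmin covered by one LED on the horizontal meridian (Fig. 2A)


def nearest_led(x):
    """Display position (arcmin) of the LED closest to horizontal position x."""
    return LED_PITCH * round(x / LED_PITCH)


def decompose_trial(gaze_t1, gaze_t2):
    """Return (X, XE, XR) for one trial, all in arcmin.

    Each bar is drawn at the LED nearest the current gaze position, so on the retina
    it lands at (LED position - gaze position) rather than exactly at zero.
    """
    p1, p2 = nearest_led(gaze_t1), nearest_led(gaze_t2)
    X = p2 - p1                            # Vernier offset on the display
    XE = gaze_t2 - gaze_t1                 # gaze displacement between exposures
    XR = (p2 - gaze_t2) - (p1 - gaze_t1)   # residual misalignment on the retina
    return X, XE, XR


# Gaze drifts 2.2 arcmin to the right: the display shows a one-LED offset (X > 0),
# while the retinal misalignment points the other way (XR < 0), as in Fig. 2F.
X, XE, XR = decompose_trial(0.3, 2.5)
print(X, XE, XR)
```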

The small stimulus offsets caused by the display resolution provide an opportunity to examine how the visual system integrates retinal and extraretinal signals at fixation. To gain insight into this process, we compared the perceptual reports recorded in the experiments to the responses of an ideal observer that inferred the most likely configuration of the stimulus from sensory measurements of both the eye displacement and the retinal misalignment. The ideal observer assumes uncertainty in sensory signals (modeled as additive Gaussian noise) and possesses only general knowledge about eye movements. Specifically, it assumes that ocular drift evolves as Brownian motion and, therefore, that the variance of gaze displacement increases linearly with time38,39. For each individual observer, the diffusion constant of this motion was estimated directly from their eye movements. In each trial, the model weighted the likelihood of the measured eye displacement by its prior and estimated the most likely configuration of the stimulus (“Left” or “Right”) by comparing the overall probability (the integral of the 2D posterior probability distribution) on the two sides of the zero-offset line (diagonal cyan line in Fig. 3A).

A An ideal observer model that combines retinal (xR) and extraretinal (xE) signals. The model assumes sensory measurements to be corrupted by unbiased additive Gaussian noise (standard deviations σR and σE) and applies a uniform prior to xR and a zero-mean Gaussian prior to xE (\(\sigma=\sqrt{2DT}\), where D is the diffusion constant of drift and T is the ISI), the latter based on the assumption that ocular drift resembles Brownian motion. In each trial, the likelihood of any given combination (XE, XR) is first converted into a joint posterior probability distribution and then integrated along the −45° line xE + xR = X (dashed line) to evaluate the probability of any given Vernier offset X. The left/right perceptual report in a trial is determined by which side of the zero-offset line (cyan line) gives higher probability. B–D Response patterns are best predicted by the model that combines both cues (B); the model that uses only extraretinal information (C) does not account for the dependence on XR, and the model that uses only retinal information (D) fits poorly. Graphic conventions are as in Fig. 2D. E, F Comparison of experimental data and model predictions of responses and d\({}^{{\prime} }\): E Probability of responding “Right” for various combinations of XR and XE (the same data points as in B–D). F Average performance measured as d\({}^{{\prime} }\) (N = 7 subjects). Error bars represent ± one SEM across subjects. The cue integration model predicts both subject responses and overall performance with significantly greater accuracy than the single-cue models. Source data are provided as a Source data file.
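Under the Gaussian assumptions stated above, the integral of the 2D posterior along the lines xE + xR = X has a closed form, because the posteriors on xE and xR are independent Gaussians and their sum is Gaussian. The following Python sketch is one reading of the model, not the authors' code: it treats the uniform prior on xR as flat (ignoring its bounds), the function names are hypothetical, and all parameter values in the usage line are made up.

```python
import math
from statistics import NormalDist


def p_right(m_E, m_R, sigma_E, sigma_R, D, T):
    """Posterior probability that the Vernier offset X = x_E + x_R is positive ("Right").

    m_E, m_R: noisy measurements (arcmin) of eye displacement and retinal misalignment.
    sigma_E, sigma_R: SDs of the additive Gaussian measurement noise (must not both vanish).
    D, T: diffusion constant (arcmin^2/s) and ISI (s); the prior on x_E is N(0, 2*D*T).
    The prior on x_R is taken as flat, so its posterior equals the likelihood.
    """
    prior_var = 2.0 * D * T
    w = prior_var / (prior_var + sigma_E ** 2)   # shrinks the eye measurement toward zero
    mean_E, var_E = w * m_E, w * sigma_E ** 2    # Gaussian posterior on x_E
    mean_R, var_R = m_R, sigma_R ** 2            # Gaussian posterior on x_R
    # Independent posteriors: X = x_E + x_R is Gaussian with summed means and variances,
    # which is what integrating the 2D posterior along the lines x_E + x_R = X yields.
    posterior_X = NormalDist(mean_E + mean_R, math.sqrt(var_E + var_R))
    return 1.0 - posterior_X.cdf(0.0)


def report(m_E, m_R, sigma_E, sigma_R, D, T):
    """Deterministic left/right report: pick the side of X = 0 with more posterior mass."""
    return "Right" if p_right(m_E, m_R, sigma_E, sigma_R, D, T) > 0.5 else "Left"


# Hypothetical parameter values: the eye signal says "Right", the retinal cue says "Left".
params = dict(sigma_E=0.5, sigma_R=1.0, D=20.0, T=0.1)
print(report(m_E=1.0, m_R=-0.5, **params))
```

With a flat prior on xR, the report reduces to the sign of \(w\,m_E + m_R\), where \(w = 2DT/(2DT+\sigma_E^2)\): the extraretinal measurement is shrunk toward zero according to its reliability relative to the drift prior and then added to the retinal measurement.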

The ideal observer closely replicated the way subjects' responses varied as a function of XE and XR (cf. Fig. 3B and Fig. 2D). As in the empirical data, the overall pattern of responses was primarily driven by the eye displacement, but a dependence on retinal misalignment was also visible for each Vernier gap on the display. Across all data points, the model accounted for 93% of the variance in subjects' responses (green dots in Fig. 3E) and accurately predicted the d\({}^{{\prime} }\) observed in the experiments (green line in Fig. 3F; individual data in Fig. S4A). Critically, both motor information about drift displacement and retinal information about bar alignment were necessary to replicate the experimental data. Discarding the retinal signal led to a reduction in performance, but the model was still able to account for about 56% of the variance in perceptual reports. In contrast, following elimination of extraretinal information, performance dropped to chance level and the model could only account for 12% of the variance (Fig. 3E, F; see also log-likelihood data in Fig. S4A). Thus, subjects performed very similarly to the predictions of a Bayesian combination of retinal and extraretinal sensory signals, with a predominant influence exerted by motor knowledge of eye movements.

The previous results indicate that motor knowledge of eye drifts during fixation is incorporated into spatial judgements. To gain insight into the mechanisms responsible for monitoring gaze position at this level of resolution, we examined the time course of this process. Specifically, as illustrated in Fig. 4A, we searched for the interval W over which the gaze displacement ΔX best correlated with the subjects' responses. To this end, we systematically varied both the duration of the window of observation (TW) and its timing (ΔtW), measured as the lag between the onset of the first bar and the window center.

A Determination of the horizontal gaze displacement \(\Delta X=X(\Delta {t}_{W}+\frac{{T}_{W}}{2})-X(\Delta {t}_{W}-\frac{{T}_{W}}{2})\) that best correlates with perceptual reports. TW represents the duration of the window of observation, ΔtW the temporal lag between the onset of the first bar and the window center. B–F Results obtained with a 100 ms ISI (panels B, C, N = 7 subjects) and with a 500 ms ISI (panels D–F, N = 3 subjects). Data were collected using the custom LED display with 5 ms flashes. B, D Correlation between ΔX and perceptual reports as a function of window duration (TW; vertical axis) and lag (ΔtW; horizontal axis). The highest correlation is achieved for a 100 ms window that slightly precedes the first bar. Side plots are sections at the optimal TW and ΔtW (red dashed lines). Shaded regions represent ± one SEM across subjects. C The timing of maximum correlation for each individual subject. On average, subjects' responses are best correlated with a 100-ms window that anticipated the stimulus by 13 ms (filled black circle; *p = \(1.52\times10^{-4}\), two-tailed t-test). Error bars represent ± one SD. E Comparison of performance with 100 and 500 ms ISIs. Performance was lower in the 500 ms condition (**p < 0.005, paired one-tailed t-test) and improved marginally with increasing Vernier offset (***above chance; p < 0.009, one-tailed t-test). Empirical data are consistent with the prediction of the ideal observer model with σE increasing proportionally to time (red curve) and lower than the prediction obtained with \({\sigma }_{E}\propto \sqrt{t}\) (yellow curve; *p < 0.037, one-tailed paired t-test). Note that these fits have no free parameters: all parameters were obtained from those estimated with the 100 ms ISI in Fig. 3. Error bars and shaded regions represent ± one SEM. 
F For each observer (different colors), performance at both 1.9\({}^{{\prime} }\) and 3.8\({}^{{\prime} }\) gaps was always lower in the 500 ms condition (p < 0.027, one-tailed bootstraps over an average of N = 87 trials across subjects and gaps). Error bars represent ± one SEM. Source data are provided as a Source data file.
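The window search of Fig. 4A can be sketched as a grid over window duration TW and lag ΔtW. The sketch assumes gaze traces sampled on a common time axis in milliseconds, linear interpolation at the window edges, and a Pearson (point-biserial) correlation between ΔX and binary reports; these choices and the function names are assumptions. The usage part runs it on synthetic random-walk traces whose reports are generated from a 100 ms window centered 13 ms before the first bar, so the search should recover that window.

```python
import numpy as np


def displacement_in_window(traces, t, first_bar_onset, T_W, dt_W):
    """ΔX = X(dt_W + T_W/2) - X(dt_W - T_W/2), times relative to first-bar onset (ms)."""
    t_end = first_bar_onset + dt_W + T_W / 2
    t_start = first_bar_onset + dt_W - T_W / 2
    return np.array([np.interp(b, t, x) - np.interp(a, t, x)
                     for x, a, b in zip(traces, t_start, t_end)])


def window_correlation_map(traces, responses, t, first_bar_onset, durations, lags):
    """Pearson (point-biserial) correlation between ΔX and binary reports on a (T_W, Δt_W) grid.

    traces: (n_trials, n_samples) horizontal gaze position in arcmin, sampled at times t (ms)
    responses: (n_trials,) 1 for "Right", 0 for "Left"
    """
    rho = np.empty((len(durations), len(lags)))
    for i, T_W in enumerate(durations):
        for j, dt_W in enumerate(lags):
            dX = displacement_in_window(traces, t, first_bar_onset, T_W, dt_W)
            rho[i, j] = np.corrcoef(dX, responses)[0, 1]
    return rho


# Synthetic check: random-walk traces and reports driven by the displacement over
# [-63, +37] ms around first-bar onset (a 100 ms window centered at -13 ms).
rng = np.random.default_rng(0)
t = np.arange(-300.0, 301.0)                                  # 1 kHz sampling, in ms
traces = np.cumsum(rng.normal(0.0, 0.2, size=(2000, t.size)), axis=1)
onset = np.zeros(2000)
responses = (displacement_in_window(traces, t, onset, 100, -13) > 0).astype(float)

durations, lags = [50, 100, 150], [-50, -13, 20]
rho = window_correlation_map(traces, responses, t, onset, durations, lags)
i, j = np.unravel_index(np.argmax(rho), rho.shape)
print(f"best window: T_W = {durations[i]} ms, Δt_W = {lags[j]} ms")
```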

Figure 4B shows the average correlation between gaze shifts and perceptual reports as a function of both position (horizontal axis) and duration (vertical axis) of the window of observation. As shown by these data, the correlation peaked for a short window of approximately 100 ms that slightly preceded the bar presentations. This finding was highly consistent across individual observers, all of whom exhibited a similar timing, resulting in a statistically significant anticipation of the onset of the window of observation relative to the onset of the first Vernier bar (−13 ms on average; Fig. 4C). Thus, during fixation, retinal signals appear to be combined with motor estimation of gaze position that slightly precedes retinal exposures, suggesting a predictive use of extraretinal signals.

Given that the duration of the optimal window in Fig. 4 was similar to the interval between bar exposures (100 ms), we wondered whether this window indicates continuous oculomotor monitoring throughout the ISI or represents a fixed internal temporal scale over which drift is estimated. Both possibilities can be mediated by a mechanism that integrates noisy velocity signals, a process similar to the one believed to occur for smooth pursuit40,41,42. However, these two hypotheses lead to distinct predictions as the ISI is further increased. If drift displacement is integrated across the entire interval between bar exposures, we would expect the uncertainty in the extraretinal measurement of displacement (i.e., its standard deviation σE) to increase no faster than \(\sqrt{t}\), as the accumulation of temporally uncorrelated noise progressively degrades the position estimate. In contrast, if gaze displacement is estimated from the movement measured over only part of the ISI, we would expect σE to increase proportionally to t, as a consequence of temporal extrapolation.
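The two predictions can be checked with a small Monte Carlo simulation in which the extraretinal signal is modeled, by assumption, as white velocity noise sampled every millisecond (units arbitrary). Integrating the noise across the whole ISI makes the error SD grow as \(\sqrt{t}\), whereas estimating velocity over a fixed 100 ms window and extrapolating it across the ISI makes the error grow linearly with t, so the ratio σE(500)/σE(100) should approach \(\sqrt{5}\) in the first case and 5 in the second. The constants and function names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(1)
SIGMA_V = 1.0     # SD of each 1-ms velocity-noise sample (hypothetical units)
WINDOW_MS = 100   # fixed estimation window, matching the ~100 ms window of Fig. 4
N_SIM = 5000


def integration_sd(isi_ms):
    """Integrate independent velocity-noise samples over the whole ISI (1-ms steps)."""
    noise = rng.normal(0.0, SIGMA_V, size=(N_SIM, isi_ms))
    return noise.sum(axis=1).std()


def extrapolation_sd(isi_ms):
    """Estimate velocity over a fixed window, then extrapolate it across the ISI."""
    noise = rng.normal(0.0, SIGMA_V, size=(N_SIM, WINDOW_MS))
    velocity_error = noise.mean(axis=1)           # per-ms velocity estimate error
    return (velocity_error * isi_ms).std()


ratio_integration = integration_sd(500) / integration_sd(100)
ratio_extrapolation = extrapolation_sd(500) / extrapolation_sd(100)
print(f"sigma_E(500)/sigma_E(100): integration {ratio_integration:.2f} "
      f"(sqrt(5) = {np.sqrt(5):.2f}), extrapolation {ratio_extrapolation:.2f} (5)")
```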

To address this question, we repeated the experiment while increasing the ISI between the bar exposures by a factor of five, to 500 ms. Except for this longer interval, the paradigm was identical to that of Fig. 2, with 5 ms exposures delivered by our custom LED display. Increasing the ISI profoundly affected performance. The proportion of correct responses at the smallest Vernier offsets dropped drastically and was now at chance level with a 1.9\({}^{{\prime} }\) gap. Furthermore, even at the much larger Vernier offsets resulting from the longer ISI, performance remained considerably lower than the levels measured in the 100 ms ISI condition (Fig. 4E). Results were highly consistent across subjects, all of whom exhibited substantial and significant reductions in performance in the 500 ms condition (Fig. 4F).

These data closely matched the predictions of our ideal observer model under the “extrapolation” hypothesis, i.e., when the uncertainty in the extraretinal measurement for the 500-ms ISI was assumed to be five times larger than for the 100-ms ISI (σE(500) = 5 σE(100); red curve in Fig. 4E). In contrast, model predictions fell far from the data under the “integration” hypothesis, i.e., when extraretinal uncertainty was increased in proportion to the square root of time (\({\sigma }_{E}(500)=\sqrt{5}\ {\sigma }_{E}(100)\); yellow curve in Fig. 4E). In this case, the model significantly overestimated performance in all observers (Fig. S5). Consistent with these data, the duration of the temporal window over which gaze displacement best correlated with perceptual reports did not increase, but remained similar to that observed for the shorter ISI (Fig. 4D).

These findings are not compatible with continuous monitoring of eye position throughout the ISI. They suggest that extraretinal estimation of ocular drift is conducted over a short window of approximately 100 ms. When asked to estimate ocular displacement over a longer interval, subjects resort to extrapolation, presumably because this is the only possible strategy under our experimental conditions.

Discussion

The eyes drift incessantly in the intervals between saccades, even when attending to a single point, raising fundamental questions on how the visual system avoids perceptual blurring, resolves fine detail, and establishes stable high-acuity spatial representations. Existing theories assume that these processes rely exclusively on the output signals from the retina4,8,43. Contrary to this idea, our results show that the human visual system has access to high-resolution motor knowledge about eye movements and integrates this information with signals from the retina to estimate fine spatial relations. These findings challenge the standard view of passive processing of a retinal image during fixation and indicate that the computations responsible for representing visual space are intrinsically sensorimotor.

To unveil extraretinal contributions, our study relied on methods for gaze-contingent display control, the updating of the stimulus according to the observer’s eye movements. This approach enables both precise control of visual input signals and manipulation of visuomotor contingencies. Specifically, in our experiments, we tailored the visual input to create a stimulus configuration on the display that conveyed no spatial information on the retina. Our data show that even under these stringent conditions, humans retain sufficient knowledge of their oculomotor activity to reconstruct the direction in which gaze drifts over a short interval. This knowledge is specific, enabling detection of gaze displacements with arcminute resolution. Furthermore, this oculomotor signal is evaluated in light of general knowledge of eye drift statistics, so that spatial judgements closely follow the predictions of an ideal observer that assigns uncertainty to the estimated spatial representation (the sum of retinal and extraretinal information) based on the reliability of the extraretinal signal.

Our results also indicate that the extraretinal signal is continually estimated over a short temporal interval of approximately 100 ms. This interval systematically precedes visual stimulation by more than 10 ms, likely yielding an even larger lag once the response delays of visual neurons are taken into account. The duration of the window of integration appears inflexible, forcing subjects in our experiment with a long ISI to infer gaze displacement via extrapolation (Fig. 4E). This strategy likely represents an adaptation to the unnatural conditions of our experiments, where visual information is not continuously present. It is remarkable that the duration of this window approximately matches the interval of integration of neurons in the early stages of the visual system44, a matching that may have important computational consequences. Note, however, that a persistent trace of visual stimulation may not be necessary for the extraretinal signal to exert its effect, as suggested by the above chance performance measured with the 500 ms ISI (Fig. 4E).

It is important to emphasize that our findings cannot plausibly be explained by inaccurate positioning of stimuli on the retina or by inaccuracies in eye-tracking. Extensive care was taken to eliminate all informative spatial cues in the retinal input and to ensure that results were not contaminated by corollary discharges associated with saccades, microsaccades, or eye movements other than ocular drift. Our analyses confirm that our apparatus is highly precise: we can reliably measure the perceptual consequence of the stimulus misalignment caused by the finite display resolution, i.e., the tiny mismatch between the measured gaze position and the actual position of the stimulus on the display. While this retinal cue exerts a clear influence at every presented Vernier offset, it cannot account for perceptual reports, as performance in the task varied primarily with the measured gaze displacement. Modeling of eye-tracking errors showed that gaze displacement would need to be overestimated by an unrealistic amount to yield retinal cues that could account for our results.

Furthermore, the stimulus duration was more than one order of magnitude too short to provide useful motion signals45,46. Our custom display was designed to switch on and off within tens of microseconds (Fig. 2A), and the median displacement of the stimulus on the retina during the resulting brief exposures was only ~14 arcseconds, well below the thresholds reported in the literature for similar tasks47. Results did not change when only the trials with minimal displacement during exposure were selected, and the instantaneous velocities measured around the times of bar exposures were only weakly correlated with perceptual reports (Fig. 4B). Together, these observations indicate that retinal image motion played no role in our experiments.

At first sight, the finding that eye drift is monitored appears to contradict widespread assumptions in the field. An obvious conflict is with the notion that drift is not controlled, i.e., the popular idea that this motion results from noise at the neural and/or muscular level26,48. However, it has long been proposed, although this view is less well known, that the smooth fixational motion of the eye actually represents a form of slow control, a sort of pursuit of a stationary target aimed at maintaining ideal visual conditions31,32,49 and eliciting neural responses10,50. This idea has received strong support in the recent literature. It is now known that during natural fixation, when the head is free to move normally, ocular drift partially compensates for the physiological instability of the head, thereby strongly constraining retinal image motion33,51. Furthermore, changes in the characteristics of ocular drift have been observed in high-acuity tasks, such as when looking at the 20/20 line of an eye chart or when judging the expression of a distant face34,52. These changes appear to be functional, as they increase the power of the luminance modulations impinging onto retinal receptors, an effect consistent with theories arguing for temporal representations of fine spatial details6,7. The present study goes beyond this previous body of work by showing that the signals involved in exerting control at this scale also contribute extraretinal information that is integrated into spatial representations.

Our conclusions also appear to contrast with those of previous studies that used similar paradigms. Classical experiments with asynchronously displayed Verniers concluded that drift is not monitored because performance declines with increasing delays between exposures28,53. Figure 4 replicates this effect, but our data show that other factors (e.g., memory decay and/or the window over which drift is monitored) must be responsible for the measured decrement in performance. More recently, support for the notion that fixational drift is not monitored has come from systematic localization errors observed with stimuli briefly displayed in complete darkness. When reporting the position of a previously displayed reference by selecting between two probes, one at the reference's location on the display (spatiotopic probe) and one at its position on the retina (retinotopic probe), subjects systematically select the retinotopic one29. These errors are, in fact, predicted by our ideal observer model, but are attributed to the specific perceptual choice presented to the observer rather than to a lack of extraretinal knowledge of eye drift (see Fig. S7). Thus, the present study suggests alternative explanations for these previous reports in the literature.

Our findings raise a critical question: why are eye movements monitored at such a high resolution? There are several complementary ways in which an extraretinal drift signal could contribute to visual processing. One possibility is that it facilitates visual stability during fixation, i.e., that it helps disentangle the visual motion signals resulting from external objects from those generated by eye movements. Studies on how the visual system discards motion signals resulting from egomotion have primarily focused on larger eye movements, namely saccades and smooth pursuit23,24,25,54,55, often in the context of the establishment of spatiotopic representations56,57,58. These studies have emphasized the interaction between retinal and extraretinal signals, including both efference copies of motor commands59 and proprioceptive information from extraocular muscles60. The eye drift that occurs during fixation is commonly assumed to be too small for extraretinal compensation, and early suggestions that the receptive fields of neurons in the primary visual cortex counteract this motion61 were not supported by later experimental measurements62. Thus, the resulting visual motion signals are believed to be perceptually canceled solely on the basis of the retinal input29,30.

This idea, however, is at odds with the motion perceived during exposure to retinally-stabilized objects, stimuli that move with the eye so as to remain immobile on the retina29,63. Furthermore, motion perception has been observed to be biased toward the direction of eye movements, so that stimuli that move opposite to ocular drift on the retina tend to appear stable even if their motion is amplified63,64. Such a bias requires knowledge of drift direction, information that could be provided by the extraretinal signal uncovered in our experiments. There are several ways in which extraretinal knowledge of ocular drift could help improve perceptual stability. One possibility is that the visual system estimates drift motion as the lowest instantaneous velocity on the retina that is also congruent with drift direction. This view would explain not only the perceived motion with stabilized images and the directional anisotropy in motion perception, but also the perceived jittery motion of a stationary stimulus following adaptation to dynamic noise patterns30. This approach is similar to the one proposed to explain jitter after-effects, but differs from a purely retinal cancellation mechanism in also requiring directional consistency with extraretinal measurements.

Our findings also suggest another way in which extraretinal drift information could contribute to visual perception, which is by directly participating in the establishment of high-acuity spatial representations. In our experiments, observers were able to infer geometrical arrangements purely based on extraretinal information. Until now, spatial information during fixation has been assumed to be extracted solely from the responses of retinal neurons4,8,43,65. While several methods have been proposed for registering afferent visual information into spatial maps as the eye drifts, all these methods exclusively rely on the retinal input. However, this process presumably depends on the richness of visual stimulation and requires temporal accumulation of evidence, difficulties that an extraretinal drift signal could alleviate. Thus, motor knowledge of ocular drift may be particularly valuable when visual stimulation is sparse and following saccades, when new visual content is introduced on the retina. Interestingly, an extraretinal contribution makes this process similar to the coordinate transformation underlying the establishment of head-centered spatial representations during larger eye movements56,66,67,68,69, emphasizing a general computational strategy and supporting a similarity between fixational drift and pursuit movements32. Further work is needed to assess the origins of the extraretinal signal unveiled by our experiments and its specific role in representing space.

Methods

Subjects

A total of 13 subjects (5 males and 8 females; age range: 20–35), all naïve about the purpose of the study, participated in the experiments. All subjects were emmetropic, with at least 20/20 visual acuity in the right eye as measured by a Snellen eye chart, and were compensated for their participation. Informed consent was obtained from all participants following the procedures approved by Institutional Review Boards at Boston University and the University of Rochester.

Stimuli

Stimuli consisted of standard Verniers, with two vertical bars separated by a horizontal gap (the Vernier offset; Fig. 1A). The two bars were never simultaneously visible: they were exposed at different times at the current location of the line of sight on the display, so that the offset was determined by the gaze displacement that occurred in between the two exposures. In this way, the bars always appeared vertically aligned on the retina, whereas the gap on the display varied across trials based on the eye movements performed by the observer.

In the experiment of Fig. 1 (Experiment 1), each bar was 28′ long and 1.4′ wide and was exposed at a luminance of 14.2 cd/m². In the experiments of Figs. 2 and 4 (Experiments 2 and 3), bars were 19′ × 1.9′ and had a luminance of 49.6 cd/m². These dimensions were the outcome of adjusting the distance of the display so that each bar could be as thin as possible (one pixel wide in Experiment 1 and one LED wide in Experiments 2–3), while remaining clearly visible when briefly exposed at maximum intensity. Stimuli were viewed in total darkness; all light sources that could serve as potential spatial references and all visual cues that could provide information about the Vernier configuration were carefully removed.

Apparatus

Stimuli were rendered by means of EyeRIS, a hardware/software system for real-time gaze-contingent display that enables precise synchronization between eye movement data and the refresh of the display70. They were viewed monocularly with the right eye, while the left eye was patched. A dental imprint bite-bar and a headrest minimized head movements and maintained the observer at a fixed distance from the monitor.

Different displays were used in Experiment 1 and in Experiments 2–3. In Experiment 1, stimuli were rendered on a fast-phosphor CRT monitor (Iiyama HM204DT) at a resolution of 800 × 600 pixels and a 200 Hz refresh rate. The phosphors of this monitor have a decay time shorter than 2 ms. A completely dark background and tuning of the monitor to its minimum settings ensured that the edges of the display were never visible.

To further control for possible influences of phosphor persistence, residual background luminance, and retinal image motion, stimuli in Experiments 2–3 were displayed on a custom LED display specifically developed for this study (Fig. 2A). Unlike phosphors, LEDs exhibit no lingering activity, and they emit no light when inactive. The custom display consisted of 880 LEDs, 800 rectangular elements arranged into two rows, each four LEDs tall, and a 3 × 3 array of circular LEDs used for eye-tracking calibration. Each Vernier bar was produced by the simultaneous activation of a column of 4 LEDs in either the top or the bottom row. This display also offered lower latency than the CRT (3 ms vs. 7.5 ms, on average) and more precise timing, since each LED could be controlled independently, without waiting for the rasterization of a frame to be completed as in a CRT. LED activation triggered a digital signal that was sampled synchronously with the oculomotor data, so that the timing of stimulus presentation could be reconstructed offline with high precision.

To measure eye movements with the precision necessary to align stimuli on the retina, we used a dual Purkinje image (DPI) eye-tracker (Fourward Technology), an analog system with high spatiotemporal resolution and minimal delay. This specific eye-tracker has been customized over the course of two decades to refine its dynamics and minimize sources of noise. It resolves movements smaller than 1′, as tested with an artificial eye controlled by a galvanometer. Analog eye movement data were first low-pass filtered at 500 Hz, then sampled at 1 kHz, and recorded for offline analysis. Note that since the DPI directly estimates gaze position, measurement errors do not accumulate over time. That is, measurements of similar displacements estimated over different ISIs, as in Fig. 4E, are expected to possess similar accuracy.

Experimental procedures

Data were collected in multiple experimental sessions, each lasting approximately 1 h. Each session consisted of several blocks of trials, with each block containing approximately 50 trials. Every block started with preparatory procedures to ensure optimal eye-tracking. These steps included positioning the subject in the apparatus, tuning the eye-tracker, and performing calibration procedures to accurately localize gaze. Frequent breaks between blocks allowed the subject to rest. Lights were turned on during these breaks to prevent dark adaptation and minimize visibility of the edges of the CRT as well as the influence of any possible residual light.

Subjects were told that the two bars of a Vernier would be presented sequentially in random order and were asked to report whether the bottom bar was to the left or to the right of the top bar by pressing a corresponding button on a joypad. Each trial started with the subject fixating on a 5′ red dot at the center of the display for 1 s. The fixation marker was then turned off, and after a random delay uniformly distributed between 1 and 2 s, the first Vernier bar was exposed either above or below the current gaze position (with equal probability across trials). The second bar then followed after a fixed delay (the inter-stimulus interval, ISI) at the current gaze location. In this way, the two Vernier bars were aligned on the retina and separated on the display by the gaze shift that occurred during the ISI (both horizontal and vertical displacements in Experiment 1; only horizontal displacement in Experiments 2 and 3). The ISI was 100 ms in Experiments 1 and 2 and 500 ms in Experiment 3.

Slightly different procedures were adopted in Experiment 1 and in Experiments 2–3. In Experiment 1, the image was continually updated on the CRT display to replicate the visual consequences of viewing stimuli through a thin slit aperture that moved with gaze (i.e., a retinally-stabilized aperture; Fig. 1C, D). As a consequence, stimulus exposure varied across trials, as each bar remained visible as long as it was aligned with the aperture. One bar was displayed in the top half of the aperture and one in the bottom half. In Experiments 2 and 3, to eliminate possible motion signals, each Vernier bar was displayed for only 5 ms, the shortest exposure at maximum intensity afforded by our LED display. In every trial, two columns of LEDs were activated, one in the top and one in the bottom row of the display, each selected as the column closest to the horizontal gaze position measured at the time of its exposure. Apart from these differences, the paradigm was identical across experiments.
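The column-selection rule of Experiments 2–3 can be illustrated with a short Python sketch. It assumes a column pitch of 1.9′ (the step between the Vernier offsets tested in these experiments), expresses horizontal gaze in arcmin relative to a hypothetical column 0, and uses the illustrative names `nearest_column` and `vernier_trial`. Because each bar lands on the column nearest to gaze at its exposure, the display offset \(X\) differs from the eye displacement \(X_E\) by a residual retinal misalignment \(X_R\) of at most one pitch.

```python
PITCH = 1.9  # assumed LED column pitch in arcmin (one LED wide, Experiments 2-3)


def nearest_column(gaze_x: float, pitch: float = PITCH) -> int:
    """Index of the LED column closest to a horizontal gaze position (arcmin)."""
    return round(gaze_x / pitch)


def vernier_trial(gaze_x1: float, gaze_x2: float, pitch: float = PITCH):
    """Return (X, X_E, X_R) for bars exposed at gaze positions gaze_x1 and gaze_x2.

    X   : offset between the two lit columns on the display
    X_E : horizontal gaze displacement between the two exposures
    X_R : residual misalignment on the retina, so that X = X_E + X_R
    """
    col1 = nearest_column(gaze_x1, pitch)
    col2 = nearest_column(gaze_x2, pitch)
    x_display = (col2 - col1) * pitch
    x_eye = gaze_x2 - gaze_x1
    x_retina = x_display - x_eye
    return x_display, x_eye, x_retina


if __name__ == "__main__":
    # Display offset 1.9', eye displacement 2.2', retinal misalignment about -0.3'.
    print(vernier_trial(0.3, 2.5))
```

Since each bar is placed within half a pitch of the gaze position at its exposure, the two rounding errors can add up to at most one pitch, which bounds \(|X_R|\).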

Data analysis

Oculomotor data

Periods of blinks and poor tracking were automatically detected by the DPI eye-tracker. Only trials with optimal, uninterrupted eye-tracking and no blinks were selected for data analysis. Recorded oculomotor traces were first automatically segmented into separate periods of drift and saccades based on a speed threshold of 3°/s, and the segmentation was then validated by human experts. Segmentation based on eye speed is very accurate with the high-quality data provided by the DPI during head immobilization. In this study, we specifically focus on ocular drift: all trials that contained other types of eye movements, such as saccades and microsaccades, were excluded from data analysis.
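A minimal Python sketch of speed-based segmentation follows, under stated assumptions: gaze positions are given in degrees at the 1 kHz sampling rate of the recordings, speed is computed from raw sample-to-sample differences with no additional smoothing (a simplification; a practical pipeline would typically filter the signal first), and any interval faster than 3°/s is labeled as part of a saccade. The function names are hypothetical, and the expert validation step is not modeled.

```python
import math

FS_HZ = 1000           # sampling rate of the recorded traces (1 kHz)
SPEED_THRESHOLD = 3.0  # deg/s, threshold separating drift from saccades


def segment(x_deg, y_deg, fs=FS_HZ, threshold=SPEED_THRESHOLD):
    """Split a gaze trace into runs labeled 'drift' or 'saccade'.

    Speed is computed between consecutive samples; each returned tuple is
    (label, start, stop) with stop exclusive, indexing the inter-sample intervals.
    """
    speeds = [math.hypot(x_deg[i + 1] - x_deg[i], y_deg[i + 1] - y_deg[i]) * fs
              for i in range(len(x_deg) - 1)]
    labels = ["saccade" if s > threshold else "drift" for s in speeds]
    segments, start = [], 0
    for i in range(1, len(labels) + 1):
        # Close the current run at the end of the trace or when the label changes.
        if i == len(labels) or labels[i] != labels[start]:
            segments.append((labels[start], start, i))
            start = i
    return segments


def drift_only(x_deg, y_deg):
    """True if no interval in the trial exceeds the speed threshold."""
    return all(label == "drift" for label, _, _ in segment(x_deg, y_deg))
```

Trials for which `drift_only` returns `False` would be the ones excluded under the criterion described above.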

Evaluation of performance

At every Vernier offset, performance was quantified by means of both proportion correct and d′. For each individual observer, we used bootstrapping to evaluate statistical significance across conditions and differences from chance level (Figs. S4 and S5). The data reported in Figs. 1–4 are averages across observers, together with the corresponding statistics.
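The two performance measures can be sketched in Python as follows. The sketch assumes that, at a given offset magnitude, "hits" are "Right" reports on trials with a positive offset and "false alarms" are "Right" reports on trials with the corresponding negative offset, and it clips rates with a 1/(2N) correction to avoid infinite z-scores; both choices, like the function names, are assumptions of this example rather than a documented procedure. The bootstrap helper estimates how often a resampled proportion correct falls at or below chance (0.5).

```python
import random
from statistics import NormalDist


def d_prime(responses, offsets):
    """d' = z(H) - z(F) for "Left"/"Right" reports at signed Vernier offsets."""
    pos = [r for r, x in zip(responses, offsets) if x > 0]
    neg = [r for r, x in zip(responses, offsets) if x < 0]

    def right_rate(rs):
        n = len(rs)
        k = sum(1 for r in rs if r == "Right")
        # Keep the rate inside [1/(2n), 1 - 1/(2n)] so that z stays finite.
        return min(max(k / n, 1 / (2 * n)), 1 - 1 / (2 * n))

    z = NormalDist().inv_cdf
    return z(right_rate(pos)) - z(right_rate(neg))


def bootstrap_p_chance(correct, n_boot=2000, seed=0):
    """Fraction of bootstrap resamples whose proportion correct is <= 0.5.

    correct: list of 0/1 outcomes, one per trial.
    """
    rng = random.Random(seed)
    n = len(correct)
    at_or_below = 0
    for _boot in range(n_boot):
        resample = [rng.choice(correct) for _trial in range(n)]
        if sum(resample) / n <= 0.5:
            at_or_below += 1
    return at_or_below / n_boot
```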

In Fig. 2, performance is also examined as a function of both the horizontal eye displacement (\(X_E\)) and the estimated misalignment of the two bars on the retina (\(X_R\), with \(|X_R| < 2'\)). Ideally, the two Vernier bars would be perfectly aligned on the retina. However, each bar could only be displayed at the pixel/LED closest to the estimated gaze position, so that the Vernier offset \(X\) on the display was not \(X_E\), but \(X_E + X_R\). We assessed the joint influence of \(X_E\) and \(X_R\) by binning trials according to their values so as to uniformly sample the space, and examined how perceptual reports varied across bins. In the \((X_E, X_R)\) space, a given Vernier offset \(X\) on the display corresponds to a line tilted by −45°, as the same \(X\) can be reached with various cue combinations (\(X_E + X_R = X\)). The 5 lines in Fig. 2D correspond to the 5 Vernier offsets reached in the experiment (0′, ±1.9′, ±3.8′). The data in Fig. 2D represent averages obtained by pooling data across subjects, so that each bin contained on average 60 trials.
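The binning of trials in the \((X_E, X_R)\) space can be sketched in Python. Fixed-width rectangular bins are used here as a simple stand-in for the binning described above; the bin widths, the ±1 coding of reports, and the function name are placeholders, not the values used for Fig. 2D.

```python
import math
from collections import defaultdict


def bin_reports(x_e, x_r, reports, width_e=1.0, width_r=0.5):
    """Group trials into (X_E, X_R) bins and return the fraction of 'Right' reports.

    x_e, x_r : per-trial eye displacement and retinal misalignment (arcmin)
    reports  : per-trial report coded +1 ('Right') or -1 ('Left')
    Returns {(i_e, i_r): (n_trials, fraction_right)} keyed by integer bin indices.
    """
    tally = defaultdict(lambda: [0, 0])  # bin -> [n_trials, n_right]
    for e, r, rep in zip(x_e, x_r, reports):
        key = (math.floor(e / width_e), math.floor(r / width_r))
        tally[key][0] += 1
        tally[key][1] += 1 if rep > 0 else 0
    return {key: (n, n_right / n) for key, (n, n_right) in tally.items()}
```

Bins whose centers satisfy \(X_E + X_R = X\) lie along the same −45° line and therefore share one display offset, which is how the groups of trials can be compared across cue combinations.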

In Fig. 4B, D, the correlation between gaze displacement and perceptual reports was examined as a function of both the lag \(\Delta t_W\) and the duration \(T_W\) of the temporal window of observation. To this end, we first converted each subject's responses into a binary format (−1 and 1) and then computed the Pearson correlation coefficient between these responses and the horizontal displacement in the interval \([\Delta t_W - T_W/2,\ \Delta t_W + T_W/2]\). Highly similar results were obtained over sets of trials collected early or late in the experiments, suggesting little influence of training (Fig. S6).
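The window analysis can be sketched in Python. The sketch assumes that each trial's horizontal gaze trace is sampled at 1 kHz (one sample per ms, as in the recordings) and that a hypothetical sample index `t0` marks time zero for the lag \(\Delta t_W\); the reference event used for Fig. 4B, D is not specified here. Pearson's r is written out explicitly to keep the example dependency-free.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def window_correlation(traces, reports, lag_ms, dur_ms, t0):
    """Correlate +/-1 reports with the horizontal gaze displacement measured over
    [lag_ms - dur_ms / 2, lag_ms + dur_ms / 2] relative to sample index t0.

    traces : per-trial horizontal gaze positions sampled at 1 kHz (1 sample per ms)
    reports: per-trial responses coded -1 ('Left') or +1 ('Right')
    """
    start = t0 + round(lag_ms - dur_ms / 2)
    stop = t0 + round(lag_ms + dur_ms / 2)
    displacements = [trace[stop] - trace[start] for trace in traces]
    return pearson(displacements, reports)
```

Evaluating `window_correlation` over a grid of `lag_ms` and `dur_ms` values produces a two-dimensional map of correlation as a function of window lag and duration.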

Ideal observer model

To gain insight into the mechanisms by which extraretinal estimation of ocular drift contributes to representing space, we compared the perceptual reports measured in the experiments to those of an ideal observer that adds noisy sensory measurements of the spatial cues on the retina and of eye movements (\(X_R\) and \(X_E\)) to establish head-centered representations. The ideal observer assumes ocular drift to resemble Brownian motion with a specific diffusion rate. This assumption is incorporated in the joint prior distribution \(p(X_R, X_E)\), which is uniform along \(X_R\) and follows a Gaussian distribution with zero mean and standard deviation \(\sqrt{2DT}\) along \(X_E\), where \(D\) is the diffusion coefficient of the individual's drift process and \(T\) the ISI. In a Brownian process, the variance grows in proportion to time. For each subject, we estimated \(D\) from the recorded eye traces via linear regression of the variance of the gaze displacement over the considered ISI. The sensory measurements \(x_E\) and \(x_R\) of \(X_E\) and \(X_R\) were assumed to be corrupted by independent additive white noise with Gaussian distributions: \(p(x_R \mid X_R) = \mathcal{N}(X_R, \sigma_R)\) and \(p(x_E \mid X_E) = \mathcal{N}(X_E, \sigma_E)\), where the second argument of \(\mathcal{N}\) denotes the standard deviation.
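A Python sketch of the diffusion-coefficient estimate, assuming one-dimensional Brownian drift, for which the variance of the displacement over a lag \(\tau\) is \(2D\tau\). Here \(D\) is obtained by least-squares regression of displacement variance on lag through the origin, with lags measured in samples (1 ms at 1 kHz); the fit through the origin, the lag range, and the function names are assumptions of this example. The simulated random walk is included only to exercise the estimator.

```python
import math
import random


def estimate_diffusion(traces, max_lag):
    """Estimate D (units^2 per sample) from var[x(t + tau) - x(t)] = 2 * D * tau."""
    lags, variances = [], []
    for tau in range(1, max_lag + 1):
        disp = [tr[i + tau] - tr[i] for tr in traces for i in range(len(tr) - tau)]
        mean = sum(disp) / len(disp)
        variances.append(sum((d - mean) ** 2 for d in disp) / len(disp))
        lags.append(tau)
    # Least-squares slope of variance vs. lag for a line through the origin.
    slope = sum(t * v for t, v in zip(lags, variances)) / sum(t * t for t in lags)
    return slope / 2.0


def prior_sd(D, T):
    """Standard deviation of the drift prior along X_E for an ISI of T samples."""
    return math.sqrt(2.0 * D * T)


def simulate_brownian(n_traces, n_samples, step_sd, seed=0):
    """Random-walk traces whose true D equals step_sd**2 / 2 per sample."""
    rng = random.Random(seed)
    traces = []
    for _trace in range(n_traces):
        x, tr = 0.0, [0.0]
        for _step in range(n_samples - 1):
            x += rng.gauss(0.0, step_sd)
            tr.append(x)
        traces.append(tr)
    return traces
```

For example, `estimate_diffusion(simulate_brownian(60, 500, 1.0), 40)` should return a value close to the true 0.5, and `prior_sd(0.5, 100)` gives the prior standard deviation for a 100-sample ISI.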

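In every trial, the ideal observer estimates the joint posterior probability of the retinal and extraretinal displacement given the sensory measurements:

\[ p(\hat{X}_E, \hat{X}_R) = p(X_E, X_R \mid x_E, x_R) \propto p(x_E \mid X_E)\, p(x_R \mid X_R)\, p(X_R, X_E). \]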

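Thus, \(p(\hat{X}_E, \hat{X}_R)\) is a two-dimensional Gaussian with mean and covariance given by:

\[ \boldsymbol{\mu} = \left( \frac{\sigma_P^2}{\sigma_P^2 + \sigma_E^2}\, x_E,\ x_R \right), \qquad \boldsymbol{\Sigma} = \begin{pmatrix} \dfrac{\sigma_E^2 \sigma_P^2}{\sigma_E^2 + \sigma_P^2} & 0 \\ 0 & \sigma_R^2 \end{pmatrix}, \]

where \(\sigma_P = \sqrt{2DT}\) is the standard deviation of the prior along \(X_E\).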

The probability of any given Vernier offset \(X\), \(p(\hat{X})\), can then be estimated by integrating the joint posterior probability \(p(\hat{X}_E, \hat{X}_R)\) along the line \(X_E + X_R = X\) (Fig. 3A):

\[ p(\hat{X} = X) = \int p(\hat{X}_E = u,\ \hat{X}_R = X - u)\, du. \]

The probabilities of reporting the bottom bar of the Vernier to the left or to the right of the top bar are then given by \(\mathrm{P}(\hat{X} < 0)\) and \(\mathrm{P}(\hat{X} > 0)\), respectively.
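Because the posterior is Gaussian with a diagonal covariance (the measurement noises are independent and the prior factorizes), the integral along \(X_E + X_R = X\) has a closed form: \(\hat{X}\) is Gaussian, with mean equal to the sum of the posterior means of \(\hat{X}_E\) and \(\hat{X}_R\) and variance equal to the sum of their posterior variances. The Python sketch below assumes that the observer's readings \(x_E\) and \(x_R\) are supplied directly (for instance, set to the values measured in a trial); the function name is hypothetical. The limit \(\sigma_E = \infty\) is handled explicitly, in which case the estimate of \(X_E\) reverts to the prior.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def report_probabilities(x_e, x_r, sigma_e, sigma_r, D, T):
    """Return (P(X_hat < 0), P(X_hat > 0)), i.e. P('Left') and P('Right')."""
    var_prior = 2.0 * D * T                  # Brownian prior on X_E: variance 2DT
    if math.isinf(sigma_e):
        mu_e, var_e = 0.0, var_prior         # no trial-specific drift information
    else:
        w = var_prior / (var_prior + sigma_e ** 2)   # weight given to x_E
        mu_e, var_e = w * x_e, w * sigma_e ** 2      # posterior mean/variance of X_E
    mu = mu_e + x_r                          # uniform prior on X_R: posterior mean x_R
    sd = math.sqrt(var_e + sigma_r ** 2)     # variances add along X_E + X_R = X
    p_right = normal_cdf(mu / sd)
    return 1.0 - p_right, p_right
```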

The two free parameters of the model, \(\sigma_E\) and \(\sigma_R\), determine the uncertainty of the sensory measurements. The larger \(\sigma_E\), the weaker the perceptual influence of the extraretinal cue; in the limit case \(\sigma_E = \infty\), there is no trial-specific extraretinal knowledge of ocular drift. These parameters were estimated individually for each subject to maximize the log-likelihood of the model replicating the subject's perceptual reports across all trials:

\[ L(\sigma_E, \sigma_R) = \sum_i \log P_i, \]

where \(P_i\) represents the probability that the model responds in the same way as the observer in trial \(i\): \(P_i = \mathrm{P}(\hat{X} < 0)\) if the subject responded “Left” and \(P_i = \mathrm{P}(\hat{X} > 0)\) if the subject responded “Right”.
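The maximum-likelihood fit can be sketched in Python with a coarse grid search over \((\sigma_E, \sigma_R)\). The sketch assumes the closed-form Gaussian report probability of the ideal observer, a fixed prior variance \(2DT\) for the subject, trials given as `(x_E, x_R, report)` tuples, and a small floor on \(P_i\) to avoid \(\log 0\). The grid search is a simple stand-in for whatever optimizer is actually used, and all names are hypothetical.

```python
import math


def prob_right(x_e, x_r, sigma_e, sigma_r, var_prior):
    """Ideal-observer P(X_hat > 0) for readings x_e, x_r (finite sigma_e)."""
    w = var_prior / (var_prior + sigma_e ** 2)
    mu = w * x_e + x_r
    sd = math.sqrt(w * sigma_e ** 2 + sigma_r ** 2)
    return 0.5 * (1.0 + math.erf(mu / (sd * math.sqrt(2.0))))


def log_likelihood(trials, sigma_e, sigma_r, var_prior, floor=1e-12):
    """L = sum_i log P_i, with P_i the model's probability of the subject's report."""
    total = 0.0
    for x_e, x_r, report in trials:
        p_right = prob_right(x_e, x_r, sigma_e, sigma_r, var_prior)
        p_i = p_right if report == "Right" else 1.0 - p_right
        total += math.log(max(p_i, floor))  # floor avoids log(0)
    return total


def fit_sigmas(trials, var_prior, grid):
    """Return the (sigma_e, sigma_r) pair on the grid that maximizes L."""
    candidates = [(se, sr) for se in grid for sr in grid]
    return max(candidates,
               key=lambda s: log_likelihood(trials, s[0], s[1], var_prior))
```

A finer grid, or a numerical optimizer applied to `-log_likelihood`, would refine the estimates; the grid keeps the example free of external dependencies.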

Evaluation of model performance

We evaluated the model in several ways. Fig. 3F compares the overall performance measured in the experiments to that predicted by the model. Predictions were first obtained for each individual observer (Fig. S4A) and then averaged across subjects in Fig. 3. The log-likelihood L with which the model accounts for each subject's perceptual responses is reported in Fig. S4A. We also examined the model's ability to reproduce the pattern of perceptual responses as a function of the measured retinal and extraretinal cues. Fig. 3B compares the output of the model to perceptual reports for each of the groups of trials of Fig. 2D. The overall accuracy of the model is summarized by the coefficient of determination R² in Fig. 3E.

Furthermore, in Fig. 3, we compared both performance and perceptual reports to those of an ideal observer that operates on just one of the two cues, either \(X_E\) or \(X_R\). In this case, the model was reduced to estimating the Vernier offset from the marginal posterior probability along the considered axis:

\[ p(\hat{X}) = p(\hat{X}_E) = \int p(\hat{X}_E, \hat{X}_R)\, d\hat{X}_R \]

or

\[ p(\hat{X}) = p(\hat{X}_R) = \int p(\hat{X}_E, \hat{X}_R)\, d\hat{X}_E, \]

with parameters obtained via the same maximum likelihood procedure used for the full model.

Dynamics of drift estimation

Distinct predictions emerge if gaze displacement is estimated over the entire interval between bar exposures or by extrapolating measurements obtained over a shorter interval. In the former case, the error in estimating gaze displacement will progressively accumulate because of the noise in the measurement. Specifically, the standard deviation of the estimate will grow as \({\sigma }_{E}\propto \sqrt{t}\) under the assumption of temporally uncorrelated sensory noise. In contrast, if drift is estimated over an interval shorter than the ISI, we would expect the displacement error to grow proportionally to time as a consequence of extrapolation: σE ∝ t.
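The two scaling laws can be checked with a small Monte Carlo simulation in Python. It assumes unit-variance, temporally uncorrelated noise added at each 1 ms step of the drift measurement and, for the extrapolation strategy, a hypothetical 100 ms estimation window; neither value is taken from the fits. Integrating over the whole interval should make the error standard deviation grow by about \(\sqrt{5}\) from 100 to 500 ms, whereas extrapolating from a fixed window makes it grow by a factor of 5.

```python
import math
import random


def error_sd(t_ms, strategy, window_ms=100, noise_sd=1.0, n_trials=2000, seed=1):
    """Empirical SD of the displacement-estimate error after t_ms milliseconds.

    'integrate'  : accumulate independent per-ms noise over the full t_ms.
    'extrapolate': accumulate noise over window_ms only, then scale by t_ms / window_ms.
    """
    rng = random.Random(seed)
    span = t_ms if strategy == "integrate" else window_ms
    errors = []
    for _trial in range(n_trials):
        err = sum(rng.gauss(0.0, noise_sd) for _ms in range(span))
        if strategy == "extrapolate":
            err *= t_ms / window_ms
        errors.append(err)
    mean = sum(errors) / n_trials
    return math.sqrt(sum((e - mean) ** 2 for e in errors) / (n_trials - 1))


if __name__ == "__main__":
    for strategy in ("integrate", "extrapolate"):
        ratio = error_sd(500, strategy) / error_sd(100, strategy)
        print(strategy, round(ratio, 2))  # roughly 2.24 and 5.0, respectively
```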

In Fig. 4C, we tested which of these two alternative hypotheses better fits the data when the ISI, \(T\), was increased from 100 ms to 500 ms. In the 500 ms condition, the standard deviation of the prior was correspondingly increased by a factor of \(\sqrt{5}\) to reflect the five-fold increase in the interval between bar exposures, as dictated by the assumption that ocular drift resembles Brownian motion. The uncertainty in the retinal signal (\(\sigma_R\)) remained the same as in the 100 ms condition. The uncertainty in the extraretinal cue (\(\sigma_E\)) was enlarged by a factor of either \(\sqrt{5}\) or 5, as predicted by the two hypotheses. Individual subjects' data and model predictions are reported in Fig. S5.
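The parameter scaling used for the 500 ms condition can be sketched in Python. The sketch takes hypothetical 100 ms parameters, scales the prior variance by 5 (prior SD by \(\sqrt{5}\)), keeps \(\sigma_R\) fixed, and scales \(\sigma_E\) by \(\sqrt{5}\) or 5 depending on the hypothesis. It then evaluates the ideal observer's probability of a correct report for a trial with no retinal misalignment (\(x_R = 0\)), which is a simplification. Function names and numerical values are illustrative only.

```python
import math


def scale_to_isi(sigma_e, sigma_r, var_prior, factor, hypothesis):
    """Scale 100-ms parameters to an ISI `factor` times longer (factor=5 for 500 ms)."""
    k = math.sqrt(factor) if hypothesis == "integrate" else factor
    return sigma_e * k, sigma_r, var_prior * factor


def p_correct(x_e, sigma_e, sigma_r, var_prior):
    """P(correct) for eye displacement x_e and x_R = 0 under the Gaussian posterior."""
    w = var_prior / (var_prior + sigma_e ** 2)
    sd = math.sqrt(w * sigma_e ** 2 + sigma_r ** 2)
    return 0.5 * (1.0 + math.erf(abs(w * x_e) / (sd * math.sqrt(2.0))))


if __name__ == "__main__":
    base = (1.0, 1.0, 4.0)        # hypothetical sigma_E, sigma_R, 2DT at 100 ms
    x_e = math.sqrt(4.0 * 5)      # one prior SD at 500 ms
    for hyp in ("integrate", "extrapolate"):
        print(hyp, round(p_correct(x_e, *scale_to_isi(*base, 5, hyp)), 3))
```

Under these illustrative values, the extrapolation hypothesis predicts a larger drop in performance at the longer ISI, because its \(\sigma_E\) grows faster than the prior standard deviation.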

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

Data are available from the Harvard Dataverse at https://doi.org/10.7910/DVN/FYKP2L. Source data are provided with this paper.

Code availability

The Matlab code for analyzing the data and generating the figures is available at https://doi.org/10.5281/zenodo.7433824.

References

Ratliff, F. & Riggs, L. A. Involuntary motions of the eye during monocular fixation. J. Exp. Psychol. 40, 687–701 (1950).

Ditchburn, R. W. Eye movements in relation to retinal action. Opt. Acta 1, 171–176 (1955).

Steinman, R. M., Levinson, J. Z., Collewijn, H. & Van der Steen, J. Vision in the presence of known natural retinal image motion. J. Opt. Soc. Am. A 2, 226–233 (1985).

Burak, Y., Rokni, U., Meister, M. & Sompolinsky, H. Bayesian model of dynamic image stabilization in the visual system. Proc. Natl Acad. Sci. USA 107, 19525–19530 (2010).

Marshall, W. H. & Talbot, S. A. in Biological Symposia: Visual Mechanisms (ed. Klüver, H.) Vol. 7, 117–164 (Cattell, 1942).

Ahissar, E. & Arieli, A. Figuring space by time. Neuron 32, 185–201 (2001).

Rucci, M., Ahissar, E. & Burr, D. Temporal coding of visual space. Trends Cogn. Sci. 22, 883–895 (2018).

Anderson, A. G., Ratnam, K., Roorda, A. & Olshausen, B. A. High-acuity vision from retinal image motion. J. Vis. 20, 1–19 (2020).

Reppas, J. B., Usrey, W. M. & Reid, R. C. Saccadic eye movements modulate visual responses in the lateral geniculate nucleus. Neuron 35, 961–974 (2002).

Kagan, I., Gur, M. & Snodderly, D. M. Saccades and drifts differentially modulate neuronal activity in V1: effects of retinal image motion, position, and extraretinal influences. J. Vis. 8, 1–25 (2008).

Sommer, M. A. & Wurtz, R. H. Brain circuits for the internal monitoring of movements. Annu. Rev. Neurosci. 31, 317–338 (2008).

Ibbotson, M. & Krekelberg, B. Visual perception and saccadic eye movements. Curr. Opin. Neurobiol. 21, 553–558 (2011).

McFarland, J. M., Bondy, A. G., Saunders, R. C., Cumming, B. G. & Butts, D. A. Saccadic modulation of stimulus processing in primary visual cortex. Nat. Commun. 6, 1–14 (2015).

Li, H. H., Barbot, A. & Carrasco, M. Saccade preparation reshapes sensory tuning. Curr. Biol. 26, 1564–1570 (2016).

Benedetto, A. & Morrone, M. C. Saccadic suppression is embedded within extended oscillatory modulation of sensitivity. J. Neurosci. 37, 3661–3670 (2017).

Intoy, J., Mostofi, N. & Rucci, M. Fast and nonuniform dynamics of perisaccadic vision in the central fovea. Proc. Natl Acad. Sci. USA 118, 1–9 (2021).

Kroell, L. M. & Rolfs, M. The peripheral sensitivity profile at the saccade target reshapes during saccade preparation. Cortex 139, 12–26 (2021).

Nawrot, M. Eye movements provide the extra-retinal signal required for the perception of depth from motion parallax. Vis. Res. 43, 1553–1562 (2003).

Nadler, J. W., Nawrot, M., Angelaki, D. E. & DeAngelis, G. C. MT neurons combine visual motion with a smooth eye movement signal to code depth-sign from motion parallax. Neuron 63, 523–532 (2009).

Lappe, M., Bremmer, F. & Van den Berg, A. V. Perception of self-motion from visual flow. Trends Cogn. Sci. 3, 329–336 (1999).

Rolfs, M., Jonikaitis, D., Deubel, H. & Cavanagh, P. Predictive remapping of attention across eye movements. Nat. Neurosci. 14, 252–256 (2011).

Poletti, M., Burr, D. C. & Rucci, M. Optimal multimodal integration in spatial localization. J. Neurosci. 33, 14259–14268 (2013).

Sun, L. D. & Goldberg, M. E. Corollary discharge and oculomotor proprioception: cortical mechanisms for spatially accurate vision. Annu. Rev. Vis. Sci. 2, 61–84 (2016).

Wurtz, R. H. Corollary discharge contributions to perceptual continuity across saccades. Annu. Rev. Vis. Sci. 4, 215–237 (2018).

Binda, P. & Morrone, M. C. Vision during saccadic eye movements. Annu. Rev. Vis. Sci. 4, 193–213 (2018).

Cornsweet, T. N. Determination of the stimuli for involuntary drifts and saccadic eye movements. J. Opt. Soc. Am. 46, 987–993 (1956).

Fiorentini, A. & Ercoles, A. M. Involuntary eye movements during attempted monocular fixation. Atti. Fond. Giorgio Ronchi 21, 199–217 (1966).

Findlay, J. M. Direction perception and human fixation eye movements. Vis. Res. 14, 703–711 (1974).

Poletti, M., Listorti, C. & Rucci, M. Stability of the visual world during eye drift. J. Neurosci. 30, 11143–11150 (2010).

Murakami, I. & Cavanagh, P. A jitter after-effect reveals motion-based stabilization of vision. Nature 395, 798–801 (1998).

Nachmias, J. Determiners of the drift of the eye during monocular fixation. J. Opt. Soc. Am. 51, 761–766 (1961).

Steinman, R. M., Haddad, G. M., Skavenski, A. A. & Wyman, D. Miniature eye movement. Science 181, 810–819 (1973).

Poletti, M., Aytekin, M. & Rucci, M. Head-eye coordination at the microscopic scale. Curr. Biol. 25, 3253–3259 (2015).

Intoy, J. & Rucci, M. Finely tuned eye movements enhance visual acuity. Nat. Commun. 11, 1–11 (2020).

Gruber, L. Z. & Ahissar, E. Closed loop motor-sensory dynamics in human vision. PLoS ONE 15, 1–18 (2020).

Lin, Y. C., Intoy, J., Clark, A., Rucci, M. & Victor, J. D. Cognitive influences on fixational eye movements during visual discrimination. J. Vis. 21, 1894 (2021).

Ko, H. K., Snodderly, D. M. & Poletti, M. Eye movements between saccades: measuring ocular drift and tremor. Vis. Res. 122, 93–104 (2016).

Engbert, R., Mergenthaler, K., Sinn, P. & Pikovsky, A. An integrated model of fixational eye movements and microsaccades. Proc. Natl Acad. Sci. USA 108, 765–770 (2011).

Kuang, X., Poletti, M., Victor, J. D. & Rucci, M. Temporal encoding of spatial information during active visual fixation. Curr. Biol. 22, 510–514 (2012).

Krauzlis, R. J. & Lisberger, S. G. A model of visually-guided smooth pursuit eye movements based on behavioral observations. J. Comput. Neurosci. 1, 265–283 (1994).

Spering, M. & Montagnini, A. Do we track what we see? Common versus independent processing for motion perception and smooth pursuit eye movements: a review. Vis. Res. 51, 836–852 (2011).

Orban de Xivry, J.-J., Coppe, S., Blohm, G. & Lefèvre, P. Kalman filtering naturally accounts for visually guided and predictive smooth pursuit dynamics. J. Neurosci. 33, 17301–17313 (2013).

Rucci, M. & Victor, J. D. The unsteady eye: an information-processing stage, not a bug. Trends Neurosci. 38, 195–206 (2015).

Benardete, E. A. & Kaplan, E. The receptive field of the primate P retinal ganglion cell, I: Linear dynamics. Vis. Neurosci. 14, 169–185 (1997).

Tayama, T. The minimum temporal thresholds for motion detection of grating patterns. Perception 29, 761–769 (2000).

Borghuis, B. G., Tadin, D., Lankheet, M. J., Lappin, J. S. & van de Grind, W. A. Temporal limits of visual motion processing: Psychophysics and neurophysiology. Vision 3, 1–17 (2019).

Johnson, C. A. & Scobey, R. P. Foveal and peripheral displacement thresholds as a function of stimulus luminance, line length and duration of movements. Vis. Res. 20, 709–715 (1980).

Ditchburn, R. W. & Ginsborg, B. L. Vision with a stabilized retinal image. Nature 170, 36–37 (1952).

Epelboim, J. & Kowler, E. Slow control with eccentric targets: evidence against a position-corrective model. Vis. Res. 33, 361–380 (1993).

Sanseverino, E. R., Galletti, C., Maioli, M. G. & Squatrito, S. Single unit responses to visual stimuli in cat cortical Areas 17 and 18: III. Responses to moving stimuli of variable velocity. Arch. Ital. Biol. 117, 248–267 (1979).

Aytekin, M., Victor, J. D. & Rucci, M. The visual input to the retina during natural head-free fixation. J. Neurosci. 34, 12701–12715 (2014).

Shelchkova, N., Tang, C. & Poletti, M. Task-driven visual exploration at the foveal scale. Proc. Natl Acad. Sci. USA 116, 5811–5818 (2019).

Matin, L., Pola, J., Matin, E. & Picoult, E. Vernier discrimination with sequentially-flashed lines: Roles of eye movements, retinal offsets and short-term memory. Vis. Res. 21, 647–656 (1981).

Kowler, E., Rubinstein, J. F., Santos, E. M. & Wang, J. Predictive smooth pursuit eye movements. Annu. Rev. Vis. Sci. 5, 223–246 (2019).

Fooken, J., Kreyenmeier, P. & Spering, M. The role of eye movements in manual interception: a mini-review. Vis. Res. 183, 81–90 (2021).

Galletti, C., Battaglini, P. P. & Fattori, P. Parietal neurons encoding spatial locations in craniotopic coordinates. Exp. Brain Res. 96, 221–229 (1993).

Melcher, D. & Morrone, M. C. Spatiotopic temporal integration of visual motion across saccadic eye movements. Nat. Neurosci. 6, 877–881 (2003).

d’Avossa, G., Tosetti, M., Crespi, S., Biagi, L., Burr, D. C. & Morrone, M. C. Spatiotopic selectivity of BOLD responses to visual motion in human area MT. Nat. Neurosci. 10, 249–255 (2007).

Sperry, R. W. Neural basis of the spontaneous optokinetic response produced by visual inversion. J. Comp. Physiol. Psychol. 43, 482–489 (1950).

Donaldson, I. The functions of the proprioceptors of the eye muscles. Philos. Trans. R. Soc. Lond. B 355, 1685–1754 (2000).

Motter, B. C. & Poggio, G. F. Dynamic stabilization of receptive fields of cortical neurons (VI) during fixation of gaze in the macaques. Exp. Brain Res. 83, 37–43 (1990).

Gur, M. & Snodderly, D. M. Visual receptive fields of neurons in primary visual cortex (V1) move in space with the eye movements of fixation. Vis. Res. 37, 257–265 (1997).

Arathorn, D. W., Stevenson, S. B., Yang, Q., Tiruveedhula, P. & Roorda, A. How the unstable eye sees a stable and moving world. J. Vis. 13, 1–19 (2013).

Riggs, L. A., Ratliff, F., Cornsweet, J. C. & Cornsweet, T. N. The disappearance of steadily fixated visual test objects. J. Opt. Soc. Am. 43, 495–501 (1953).

Rivkind, A., Ram, O., Assa, E., Kreiserman, M. & Ahissar, E. Visual hyperacuity with moving sensor and recurrent neural computations. in International Conference on Learning Representations (2021).

Ilg, U. J., Schumann, S. & Thier, P. Posterior parietal cortex neurons encode target motion in world-centered coordinates. Neuron 43, 145–151 (2004).

Spering, M. & Gegenfurtner, K. R. Contrast and assimilation in motion perception and smooth pursuit eye movements. J. Neurophysiol. 98, 1355–1363 (2007).

Freeman, T. C., Champion, R. A. & Warren, P. A. A Bayesian model of perceived head-centered velocity during smooth pursuit eye movement. Curr. Biol. 20, 757–762 (2010).

Bogadhi, A. R., Montagnini, A. & Masson, G. S. Dynamic interaction between retinal and extraretinal signals in motion integration for smooth pursuit. J. Vis. 13, 1–26 (2013).

Santini, F., Redner, G., Iovin, R. & Rucci, M. EyeRIS: a general-purpose system for eye movement contingent display control. Behav. Res. Methods 39, 350–364 (2007).

Acknowledgements

This work was supported by the National Institutes of Health grants EY18363 (M.R.) and EY07977 (J.V.). We thank Claudia Cherici and David Richters for their help in preliminary experiments and Janis Intoy and Martina Poletti for helpful comments and discussions.

Author information

Contributions

Z.Z. implemented the experiments and the model, collected and analyzed experimental data, and ran simulations. E.A. and M.R. conceived the original idea. J.V. helped interpret data, formalize the ideas, and develop the model. M.R. supervised the project. All authors contributed to the writing of the article.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review information

Nature Communications thanks Igor Kagan, Maria Morrone and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhao, Z., Ahissar, E., Victor, J.D. et al. Inferring visual space from ultra-fine extra-retinal knowledge of gaze position. Nat Commun 14, 269 (2023). https://doi.org/10.1038/s41467-023-35834-4
